The perceptron is a supervised learning algorithm for binary classification. It learns weight coefficients that are multiplied with the input features to decide whether a sample belongs to one class or the other.

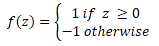

We label the two classes in the binary classification as 1, a positive instance, and -1, a negative instance. We then define a decision function f(z) that predicts class 1 if z is larger than the threshold 0, and class -1 otherwise.

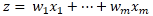

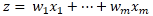
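As a minimal sketch of the decision function described above, the snippet below returns 1 when z is larger than the threshold and -1 otherwise. The name `predict_class` and the `threshold` parameter are illustrative choices, not part of the original text.

```python
def predict_class(z, threshold=0.0):
    """Step function f(z): class 1 if z is larger than the threshold, else -1."""
    return 1 if z > threshold else -1


print(predict_class(0.7))   # 1
print(predict_class(-0.2))  # -1
print(predict_class(0.0))   # -1, because 0 is not larger than the threshold
```

Note that a value of z exactly equal to the threshold falls into the negative class under this definition.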

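The function f(z) takes the net input z, which combines the input values x with the corresponding weight vector w. For m input features, z is the dot product

$$z = w_1 x_1 + w_2 x_2 + \dots + w_m x_m$$

or

$$z = \sum_{j=1}^{m} w_j x_j$$

or

$$z = \mathbf{w}^T \mathbf{x}$$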
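To make the dot product concrete, here is a small sketch in plain Python that computes z from a weight vector and an input vector. The function name `net_input` and the example numbers are assumptions made for illustration.

```python
def net_input(w, x):
    """Dot product z = w_1*x_1 + ... + w_m*x_m of weights w and inputs x."""
    if len(w) != len(x):
        raise ValueError("w and x must have the same number of elements")
    return sum(w_j * x_j for w_j, x_j in zip(w, x))


w = [0.5, -1.0, 2.0]  # hypothetical weights
x = [2.0, 1.0, 0.5]   # hypothetical input features
z = net_input(w, x)   # 0.5*2.0 + (-1.0)*1.0 + 2.0*0.5
print(z)              # 1.0
```

Since z is larger than 0 here, the decision function would predict class 1 for this input.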

The process of running the perceptron learning rule is:

- Initialize weights to 0 or small random numbers

- For each training sample, perform the following

- Compute the output value

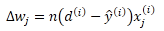

- Update the weights

$$w := w + \Delta w, \qquad \Delta w = n \, (d - y) \, x$$

Where: n is the learning rate, d is the correct target classification, y is the predicted classification and x is the input.

When the change in weight equals zero, the predicted class y matches the target d, which means we have predicted the correct class and the weights stay as they are.
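The sketch below illustrates a single update, assuming the usual form of the perceptron rule in which the change for each weight is n * (d - y) * x_j. The function name `weight_change` and the sample values are hypothetical.

```python
def weight_change(n, d, y, x):
    """Change in each weight, assuming the rule n * (d - y) * x_j."""
    return [n * (d - y) * x_j for x_j in x]


x = [2.0, 1.0]  # hypothetical input sample

# Correct prediction (d == y): every change is zero, the weights stay put.
print(weight_change(0.1, 1, 1, x))   # [0.0, 0.0]

# Wrong prediction (target 1, predicted -1): weights move toward the input.
print(weight_change(0.1, 1, -1, x))

# Wrong prediction (target -1, predicted 1): weights move away from the input.
print(weight_change(0.1, -1, 1, x))
```

Because d and y only take the values 1 and -1, the factor (d - y) is either 0, 2 or -2, so a misclassified sample always shifts the weights by a multiple of the input.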

Convergence of the perceptron is only guaranteed if the two classes are linearly separable and the learning rate is sufficiently small. If the two classes cannot be separated by a linear decision boundary, the perceptron would never stop updating the weights, so we set a maximum number of iterations (passes over the training dataset).
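Putting the steps together, the following is a minimal sketch of the learning rule with a cap on the number of passes over the training data. It assumes the update n * (d - y) * x, zero-initialized weights and no bias term. The names `train_perceptron`, `n_iter` and the toy dataset are illustrative assumptions.

```python
def train_perceptron(X, targets, n=0.1, n_iter=10):
    """Train perceptron weights; stop early once a full pass has no errors."""
    w = [0.0] * len(X[0])          # initialize weights to 0
    errors_per_pass = []
    for _ in range(n_iter):        # at most n_iter passes over the data
        errors = 0
        for x, d in zip(X, targets):
            z = sum(w_j * x_j for w_j, x_j in zip(w, x))  # net input
            y = 1 if z > 0 else -1                        # predicted class
            step = n * (d - y)                            # zero if correct
            if step != 0:
                w = [w_j + step * x_j for w_j, x_j in zip(w, x)]
                errors += 1
        errors_per_pass.append(errors)
        if errors == 0:            # every sample classified correctly
            break
    return w, errors_per_pass


# Toy data, linearly separable by a boundary through the origin.
X = [[2.0, 1.0], [1.0, 3.0], [-1.0, -2.0], [-2.0, -1.0]]
targets = [1, 1, -1, -1]

w, errors_per_pass = train_perceptron(X, targets)
print(errors_per_pass)  # [1, 0]
```

On this toy dataset the loop stops after the second pass because no weight changes. On data that is not linearly separable, the error count never reaches zero and the loop runs until `n_iter` is exhausted.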